First a primer

At ShareChat, everyday, we deal with data of the order of billions & trillions of rows. To store, access & fetch this data for our analysis purposes, we use GCP’s BigQuery. A huge chunk of the annual compute costs we incur can be attributed to BigQuery alone - which includes BQ services like physical storage, Analysis (querying), BQ APIs, Streaming inserts etc.

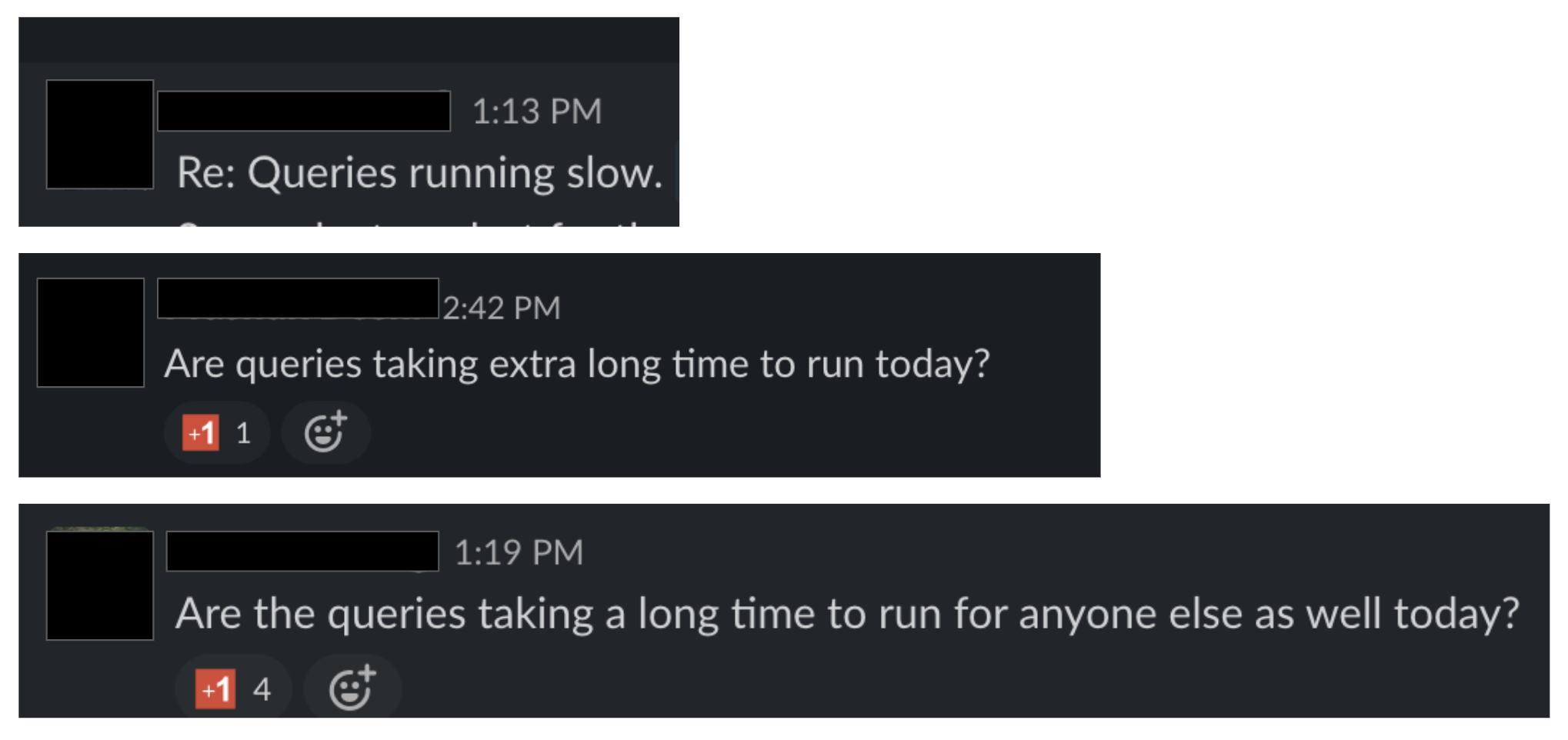

As an org, we’ve been using BigQuery for quite some time but as our team kept growing, our use of BigQuery services also increased. And we found ourselves dealing with frequent fires around BigQuery that would need immediate extinguishment. What did our problems look like? Here’s a trailer ss

For the first few times we encountered issues like these, chaos accompanied. We would then go to GCP support.

------

Us: Our BQ queries are taking too long to run, what can be the likely issues?

Support: Increase slots

Us: Ok! (but…)

The realization

We kept on increasing the slots because it would temporarily solve the issue. But purchasing slots added a lot of cost to our compute, which was already expensive. And with the rate of growth of data, access & people, was this scalable and(or) sustainable?

HELL NO!

That’s when we realized that this is a recurring fire that needs an autoscaling solution.

We want to solve for the optimization problem:

- Idle Load condition: If we keep increasing Flat Rate slots, more often than not we will have these idle load conditions. We’re paying for what we’re not using

- Peak Load condition: We don’t want slower query SLAs, timeouts, fails etc. We don’t want our system to get choked because of some p99 queries

As the demand increases, our engines should be able to handle the load by spinning off more slots, and when the demand falls back to normal, the extra slots should be deprovisioned. We can engineer a way of doing the same, by leveraging BigQuery’s flex slots!

We can’t keep increasing our slots on an ad-hoc basis and we also can’t let our costs compound with time. It is high time we put the fire extinguishing mechanism on autopilot mode.

\

Enter Flex Slots to the rescue

- What are slots, even? How do they work?

Slots are the virtual CPUs that do the computation in our queries. We can do a monthly/annual Flat Rate commitment to purchase x slots. Thus we pay the price of x slots for a month/year upfront. (Flat Rate is preferred as the costs are lower than on-demand costs).

But if our compute demand is more than our total slot capacity, we will definitely face some down time + delays etc.

- What are Flex Slots?

Flat Rate can be too much to commit for, so if we intend to leverage flat rate, trading off with the commitment time, flex slots can help. These can be bought for a min duration of 60 secs. Flex Slots are perfect for organizations with business models that are subject to huge shifts in data capacity demands. So essentially, we can autoscale using Flex Slots !!

But first, some Analysis + Planning

- How often do your slots go unused below capacity?

- How often do you find BQ battling with peak load? (slower query runtime, slower SLAs, more timeouts, fails etc)

If “often” is the answer to both the questions, then it’s time to look for ways to use our slots more efficiently.

So what did our to do look like when we started?

-

“You can’t manage what you don’t measure” - We started analyzing slot usage trends by building out Slot Usage Monitoring Dashboards to understand our system better.\We also started asking questions like:

- At what time are max slots being used? - Peak load condition

- At what time are our slots mostly idle? - Idle Load condition

- Does our “jobs scheduled time” match our peak load time? And so on.

- Knowing our teams, people and workloads: Identify how their queries/jobs can be written better. This is when we started scanning for query anti-patterns & started calling out those heavy P99 queries that would choke the entire system.

- Identifying idle slots time periods (time periods in which there exist idle slots which aren’t being used). This was a guide to better scheduling.

Some Trigger Points on Flex Slots 👀

- Flex Slots are costlier than Flat rate slots but cheaper than on-demand. For the same workload, flex slots cost 0.33x of on-demand.

- Flex slots are subject to capacity availability. There can be some latency in purchase/provisioning of flex slots.

- Flex Slots need to be exclusively “deleted / de-provisioned”. Else, they stay in the system until we delete them - costing us even when we’re not using them!!

Let’s do this!! All possible Approaches?

This problem can be solved by combining two individual methodologies:

- Core Engg way of Autoscaling

- Predictive scheduling (Time Series - predicting peak load)

Using a Time Series DS algorithm, we will forecast peak loads ahead of time. This will help us identify the time periods when to provision flex slots & when to deprovision. We will use the DS approach output to programmatically spin off flex slots whenever peak load is predicted. But some peak loads will come out of the blue - unpredicted. So we will engineer a way of automating provision/de-provisioning of flex slots when demand is sensed.

TLDR: Our systems will buy flex slots when increased demand is predicted/sensed, and it will deprovision flex slots when load is back to normal.

Our planned workflow looked somewhat like this

Our Approach

-

Doing a dry run to establish POC

- We programmed a BQ Alert for a particular project & set the alert to a base threshold value. Every time the slot usage is above the threshold (e.g. 5000 slots), the alert will be triggered.

- The BQ alert will send push notification to our pub/sub channel. (Apart from pub/sub as a notif channel, as a good-to-have, we’ve also tried & tested sending alerts to Slack channel for easy reference.)

- The pub/sub will receive an alert & send trigger alert to a cloud function. Based on the type of alert received, the cloud function will purchase (provision)slots, assign slots to the project and delete (de-provision) slots.

- A long term solution for tracking auto scaling would be to save logs in a BQ table/DB. All the data related to triggers, purchases, assignments, deletion can be stored in a DB.

- Testing: Auto purchase = provisioning+assigning+deprovisioning should be happening in real time. Chalk out testing params for determining accuracy & load control, and for measuring latency.

-

Tackle Time series problem statement - Predict Peak Loads (DS oriented)

- Define a simple baseline approach - Any method proposed should be able to beat baseline by reasonable margin.

- Define evaluation metric (Could be linked directly to cost saving from optimal prediction of spin-off time instance & switch-off time instance)

- Outcome: Forecast peak loads ahead of time. This will help us identify the time periods when to provision flex slots & when to deprovision.

- Real Optimization: Integrating DS Time series Approach + Engg approach of programmatically provisioning & deprovisioning flex slots. When high demand/peak load is sensed by the system (or forecasted by DS algo), we provision flex slots, assign them to particular projects and deprovision when demand is back to normal.

- Scaling for DAGs (Airflow jobs)

DAGs are the heavy data intensive + compute intensive jobs that are potential chokers for our systems. These are the most problematic jobs that require most resources and are usually scheduled for some particular time in the day, so we can scale flex slots for DAGs as per the requirements. - Plotting granular visualizations

The current visualizations on BQ console -> Monitoring are accurate with very low latency but it’s always good to have Project wise Slot Usage Monitoring Dashboards set up which plot data on a very granular level with ~0 latency.

There!! Since BigQuery doesn’t provide an autoscaling solution for slots, we engineered a solution for this :)

While we eagerly wait for BQ to come up with an Autoscaling solution, this will work in the meantime 🤓